The LWS, our local workspace, first so named at reference 4, has plenty of data capacity. But that data has to be self-contained: it has to hold meaning of itself, not by reference to anything else. We have to draw meaning out of the void, in the words of Genesis via Robert Alter, out of the welter and waste and darkness, with the deity starting with a division of lightness from darkness.

Hitherto, we had defined parts and layer objects on the layers of the LWS in terms of rectangular elements which were linked together by contiguity and by having the same perimeter; see, for example, reference 1. This does provide a vehicle for the representation of all kinds of data, for all kinds of conscious content, but we are no longer sure that it maps very well onto its neural substrate.

In this paper we look at alternatives.

Inter alia, we aim to define:

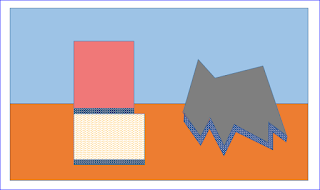

- Background – the blue above. In this example, the background has colour, but it may be something more complicated, just noise, or null. Sometimes, the sky.

- Foreground – the brown below. To use a theatrical metaphor, we might call it the stage and the background the backdrop. The horizon is the line, the boundary between foreground and background. But the foreground may be absent and the horizon may be obscured by other objects.

- Layer objects – or just objects for short, for example, the pink object in the middle. Where there is a foreground, most objects will appear to be resting on it, while some objects, for example the red object, seen against the sky, will clearly not be.

- Parts of objects – an object does not have to be divided into parts, but the green object, for example, is made up of three contiguous parts. Note: coloured in shades of green for presentation here. But the LWS does not use colour to delineate either objects or parts. There is no colour in there, any more than there is in the brain. In both places some kind of proxy is needed – with exactly what kind of a proxy being very much a present interest.

- The order of objects – the pink object, for example, is in front of the grey object.

- The texture of objects and the parts of objects – coded for by the values of the cells of their interiors. Typically at least hundreds of them, in the case of larger parts, thousands of them. Notes: 1) are the cells of an interior talking about sight, sound or what? Which of the many coding schemes are we going to use? 2) remembering here that the object may have no such texture; it may have no more than its shape; and 3) most of the objects above have been coloured for presentation here. But the yellow object has been given some Powerpoint texture.

At reference 1, we defined parts as assemblies of contiguous rectangles of cells, rectangles all having the same shape and the same perimeter. And layer objects as assemblies of contiguous parts, including the case when there was just one part, that is to say when the layer object had no distinct parts. The bit that we are changing here is the foundation, the rectangles. But we hold to the idea that all the data in the LWS is expressed spatially, leveraging the sophisticated machinery developed by vertebrates for processing the data arriving on two dimensional retinas.

Initially, we set out our ideas in a reasonably informal way. A more formal version, tidying up the various loose ends, will follow.

We first consider all those cells taking the high value.

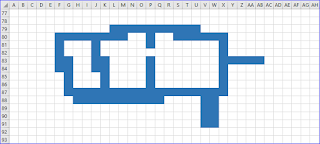

We suppose the snap above shows all the high valued cells on our layer. We have there, according to rules to be set down in due course, defined one layer object with three parts (left) and one with one part (right). Each part has an interior. In the absence of a foreground, the whole of the region outside the two layer objects is the background.

There is a certain amount of redundancy in the definition of these parts, including the two appendages to the right of the left hand object. And there are any number of ways to define any given interior, subject only to the need for space for other objects. Note also that we have more flexibility here in the shape of parts and objects than we had in the rectangular days of reference 1.

Rule: where two high valued cells are adjacent, vertically or horizontally but not diagonally, they are said to be neighbours. A high valued cell with no neighbours is called an isolated cell and for the moment we exclude any such from consideration.
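The neighbour rule can be sketched in a few lines of Python. This is only an illustration: the number used for the high value (`HIGH = 9`), the list-of-lists layout of a layer and the function names are all assumptions made here, not part of the scheme.

```python
HIGH = 9  # assumed code for the high value; the post does not fix a number


def neighbours(grid, r, c):
    """High valued cells adjacent to (r, c), vertically or horizontally, not diagonally."""
    found = []
    for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
        rr, cc = r + dr, c + dc
        inside = 0 <= rr < len(grid) and 0 <= cc < len(grid[rr])
        if inside and grid[rr][cc] == HIGH:
            found.append((rr, cc))
    return found


def isolated_cells(grid):
    """High valued cells which have no high valued neighbours."""
    return [
        (r, c)
        for r, row in enumerate(grid)
        for c, value in enumerate(row)
        if value == HIGH and not neighbours(grid, r, c)
    ]


# A small hypothetical layer: the two cells in the top row are neighbours,
# while the two cells touching only diagonally both count as isolated.
layer = [
    [9, 9, 0, 0],
    [0, 0, 0, 9],
    [0, 9, 0, 0],
    [0, 0, 9, 0],
]

print(isolated_cells(layer))  # [(1, 3), (2, 1), (3, 2)]
```

Excluding isolated cells from consideration then amounts to ignoring whatever `isolated_cells` returns when tracing the boundaries of parts and objects.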

We have now slid the right hand object under the left hand object. The yellow low valued cells mark the fact that the right object is not a fourth part of the left hand object, rather a second object behind or underneath (from the point of view of the host of the LWS) the first.

We have now marked in various patterns of green the interiors of the three parts of the left hand object and in a plain green that of the right hand object.

Holes and layers

In the snap above, not terribly clear given the constraints of Excel on the screen of a laptop, we apparently have a stack of three objects defined by the blue high values. What we actually have is a red object in two parts, a left hand part and a right hand part, on top of a much larger green object, this last with a rather ragged right hand edge. With the difference that the red object has a hole in it, allowing an otherwise occluded part of the green object to show through. Perhaps a tree with a patch of sky showing through it. Still remembering that defining objects by colour may be convenient here, but is not an option available to the compiler.

Given the rules so far, the snap would contain three objects, two green and one red, with the bands of yellow low values telling us that the red object was in front of both the green objects.

We deal with this by making use of an additional, adjacent layer to give us another view, to link the two green layer objects together. There will be other circumstances when it is convenient to make use of such an additional layer, but we do not explore the issue further here. See reference 3 for more thoughts on the use of layers.

Texture in space

Having defined parts and objects in space, as shapes in space, we note that the interiors of those parts can be null, taking little more than low values everywhere, but will usually be given what we will call texture, perhaps colour, texture being something that persists, repeats in space. Texture is the extent to which the values of the cells of a part contain signal, are not just white noise. We associate here to Kolmogorov complexity, which touches on this very point: can the object be completely described in some smaller compass than that of the object itself? Maybe it is the fact of repetition which makes it accessible to the subject, makes it conscious.
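Kolmogorov complexity itself cannot be computed, but the size of a compressed copy of the data is a commonly used stand-in. The sketch below, which is an illustration rather than anything proposed for the LWS, uses Python's standard `zlib` module to compare a repeating pattern with pseudo-random values. The cell values, the 1,024 cell size and the function name are assumptions made for the example.

```python
import random
import zlib


def compression_ratio(cells):
    """Compressed size over raw size for a flat list of small cell values (0 to 255)."""
    raw = bytes(cells)
    return len(zlib.compress(raw, 9)) / len(raw)


# Hypothetical interior with a repeating stripe pattern: two cells of 5, two of 0.
striped = [5 if (i % 4) < 2 else 0 for i in range(1024)]

# Hypothetical interior filled with pseudo-random values from 0 to 9.
rng = random.Random(0)
noisy = [rng.randrange(10) for _ in range(1024)]

print(compression_ratio(striped))
print(compression_ratio(noisy))
```

The repeating interior compresses to a much smaller fraction of its raw size than the noisy one, which is one rough way of putting a number on the idea that texture repeats in space while noise does not.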

Parts and their textures are the basic unit of consciousness, brought out by the activation processes. And while textures may vary across a part, a part remains a whole, with the variation smoothed out, to some extent, by the integrative action of the activation processes. But if that variation goes far enough, a high value line may emerge to split that part into two.

There are various ways in which one might provide such texture. For example:

- Patterns, such as horizontal or vertical stripes over the part in question. Of varying widths and values. Note: consider uniform vertical stripes of cells taking the value 5, with low values in between. In this case the pattern has the value 5, then we have a value for the width of the stripes and a value for the separation of the stripes. Each pattern of regular stripes thus codes for an integer triple.

- More varied patterns, perhaps just a collection of lines drawn out in the values of cells across the part. But would the sort of random collection of lines inside a blue part and snapped below count as information? Note: are we to allow an interest in the parts of the parts?

- Value densities. Varying mixtures of cells according to their value, along the lines of red, green and blue stimulation of the retina in the case of coloured light. Notes: 1) all the cells might take the value 1 (for red), 2 (for green), 3 (for blue) or low value (for black or absence of colour). Then we suppose the perceived colour at any particular cell to be the product of the amount of red, green and blue in its neighbourhood, appropriately weighted; 2) replacing here a numeric value for red, as can be used, for example, in Powerpoint, by the number of cells which are coded for red; 3) all the cells might take the value 7 or the low value. The texture is the varying density of 7’s across the part. There may be no variation. There may be a gradient across the part; 4) or one might look at the densities of all the possible values of cells; and 5) other things being equal, one needs more space the more complicated one gets. So with lots of complication one can have less variation in space, variation which is not usually needed with something like smell, for which we do not have a strong sense of either position or variation in space.

- Patterns built on a scattering of high value cells. This option is described in more detail below.
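Two of the options listed above, regular stripes and value densities, can be sketched as follows. The code assumes that the low value is coded as 0, that a part can be treated as a simple rectangle of cells and that a striped row starts with a stripe; the function names are hypothetical.

```python
LOW = 0  # assumed code for the low value


def striped_part(rows, cols, value, width, gap):
    """Rectangular interior of vertical stripes: `width` cells of `value`, then `gap` low cells."""
    period = width + gap
    return [
        [value if (c % period) < width else LOW for c in range(cols)]
        for _ in range(rows)
    ]


def stripe_triple(row):
    """Recover (value, width, separation) from one row of a regular striped pattern."""
    value = row[0]
    width = 0
    while width < len(row) and row[width] == value:
        width += 1
    gap = 0
    while width + gap < len(row) and row[width + gap] == LOW:
        gap += 1
    return value, width, gap


def density(part, value):
    """Fraction of the cells of a part which take the given value."""
    cells = [v for row in part for v in row]
    return cells.count(value) / len(cells)


part = striped_part(rows=4, cols=12, value=5, width=2, gap=1)
print(stripe_triple(part[0]))  # (5, 2, 1)
print(density(part, 5))
```

The same interior thus yields both an integer triple, read from the pattern, and a density, read from the mixture of values, which is part of why a choice between the options has to be made.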

But such wheezes, while perhaps intuitive, are wasteful in that one is using up a property of a point to tell one about a property of a space, be that a part, an object or a layer. Are there properties of a layer which could conveniently be used to define its mode? The mean or median of the values of its cells? The variance of those values? Some variation of auto-correlation to suit the present circumstances? Some special part of the layer which contained data about the layer as a whole?
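By way of illustration, the layer-wide properties mentioned here are easy enough to compute. The sketch below uses Python's standard `statistics` module; the particular form of auto-correlation (along rows, at a fixed horizontal lag, normalised by the variance) is just one assumed variation among many, and the function names are hypothetical.

```python
from statistics import mean, median, pvariance


def layer_summary(layer):
    """Mean, median and (population) variance of all the cell values of a layer."""
    cells = [v for row in layer for v in row]
    return {"mean": mean(cells), "median": median(cells), "variance": pvariance(cells)}


def row_autocorrelation(layer, lag):
    """Auto-correlation of cell values along rows at a horizontal lag, between -1 and 1."""
    cells = [v for row in layer for v in row]
    m = mean(cells)
    var = pvariance(cells)
    if var == 0:
        return 0.0  # a constant layer has no variation to correlate
    products = [
        (row[c] - m) * (row[c + lag] - m)
        for row in layer
        for c in range(len(row) - lag)
    ]
    return mean(products) / var


# Hypothetical layer of vertical stripes, one cell wide, one cell apart.
layer = [
    [5, 0, 5, 0],
    [5, 0, 5, 0],
]

print(layer_summary(layer))
print(row_autocorrelation(layer, 1), row_autocorrelation(layer, 2))
```

For this striped layer the auto-correlation is strongly negative at a lag of one cell and strongly positive at a lag of two, so a single number per lag does carry something about the pattern of the layer as a whole.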

However, while perhaps space efficient, it is less clear how the proposed activation processes might combine a property of a layer with the properties of groups of cells to deliver the consciousness of green over a part or an object. Having that greenness totally reside in each and every green part is more plausible.

High value cells

Here we build texture on the isolated high value cells that a part contains, isolated points for short, hereby reinstated and shown in brown in the snap which follows.

Rule: we define the value of an isolated point in the interior of a part and call it an isolated value. That value is the sum of the values of the surrounding cells of the interior, excluding other high value cells, each weighted by the inverse of its distance, or perhaps of the square of its distance, from the isolated point. Note that an interior cell which is not itself an isolated point can participate in more than one isolated value.

This definition can easily be extended to an isolated point in the background or foreground, perhaps limiting the sum to cells within at most some constant defined distance. Something which might turn out to be a good idea in an interior.
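A minimal sketch of the isolated value, under several assumptions: the high value is coded as 9, distance is straight-line (Euclidean) distance between cell centres, and the interior is handed over as a list of cell coordinates. The `power` and `max_distance` parameters are hypothetical names for the two options mentioned above, inverse distance or inverse square, and an optional limit on how far the sum reaches.

```python
import math

HIGH = 9  # assumed code for the high value


def isolated_value(grid, interior, point, power=1, max_distance=None):
    """Distance-weighted sum of the interior cell values around an isolated point.

    Other high value cells are excluded. Each remaining cell contributes
    value / distance**power, and cells beyond max_distance, if given, are skipped.
    """
    pr, pc = point
    total = 0.0
    for r, c in interior:
        if (r, c) == point or grid[r][c] == HIGH:
            continue
        distance = math.hypot(r - pr, c - pc)
        if max_distance is not None and distance > max_distance:
            continue
        total += grid[r][c] / distance ** power
    return total


# Hypothetical three by three interior with one isolated point in the middle.
grid = [
    [1, 2, 1],
    [2, 9, 2],
    [1, 2, 1],
]
interior = [(r, c) for r in range(3) for c in range(3)]

print(isolated_value(grid, interior, (1, 1)))                  # inverse distance
print(isolated_value(grid, interior, (1, 1), power=2))         # inverse square
print(isolated_value(grid, interior, (1, 1), max_distance=1))  # nearest cells only
```

Because each call loops over the whole interior, the same ordinary cell can contribute to the isolated values of several isolated points, as the rule allows.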

But how do we know what sort of a part it is? Whether that isolated value relates to sight, sound, smell or whatever? Before, we had the perimeters which could carry that sort of information, so what do we use now?

Rule: on this option, all the isolated values of a part are about the same sort of thing. They all take values in the same range, be that range of sight, sound, smell or whatever. One might well go further, and say that all the isolated values of a layer are about the same sort of thing. With few isolated points meaning little information. With the breakdown of this rule perhaps being mixed up with synaesthesia.

With part of the attraction being that using isolated points in this sort of way seems well able to cope with a certain amount of noise. The conscious experience is not going to be that sensitive to their precise positions or indeed to their presence or absence, just so long as most of them are there, in roughly the right place.

There are then various wheezes which one might use to qualify the simple numeric value. One might have regard to the size or shapes of the parts. One might have regard to the pattern, rather than the sum, of values of neighbouring cells, along the lines of the snap above, with green for higher values and yellow for lower values. In sum, in one way or another it seems that we could code up plenty of data on our isolated points.

Options not taken forward

The values of the cells of layers are going to vary in time as well as space, variations which could also be used to deliver texture. However, we have, for other reasons, opted for relatively static frames – see, for example, reference 2 – and we do not pursue texture in time, at least not for the time being.

We thought about defining objects using high value and parts using penultimate value, that is to say the largest value less than high value. This had the attraction of making parts like, but different to, objects. Nevertheless, we decided against.

We thought about allowing dotted lines, to allow parts that were almost connected to each other, with a number of wheezes coming to mind, for example softening a high value border line with some low values. We decided against, mainly on the grounds that, first, what the compiler presents to consciousness is not reality, but a calculated, computed compromise between a nice tidy diagram and reality, with part of tidiness being that something is either a boundary between two parts or not. And second, where the two sides of a part differ, that difference can be expressed in differences of texture.

Another sort of compromise is illustrated above, where what was originally two parts to the right have become one part, with things so arranged that there is a sense of the activation processes squeezing through the gap as they scan the part.

Given that many of the scenes that we will want to express in the LWS will involve gravity, with objects either floating in the air, flying through the air, sitting on the ground or sitting on each other, and that which of these is intended is not always clear from layers of the sort illustrated at the beginning of this paper, we might like to mark such sitting in some way, probably at the cost of some complication to the borders between objects, possibly needing another special value.

In the snap above we have marked the bottoms of objects actually resting directly on that visible below with narrow objects with textured interiors – a bit messy – and possibly not very true – in the case of objects which do not have flat bottoms. And, it might work better to mark objects which do not rest despite being contiguous, being rather fewer in number than those which do so rest. In any event, we have illustrated what can be done with a bit of ingenuity.

While always remembering that what we actually have in one of our parts is an oddly shaped two dimensional array of small non-negative integers. With no colour, possibly a lot more messy than that shown above and with the assumption that various sorts of small repeating patterns can be used as a proxy for the likes of colour and textures in colour.

Another idea which is not taken further forward at this time.

Other odds and ends

Suppose the brain decides on some way of coding for green in the layers of the LWS. Perhaps our familiarity with green is reflected in the ease with which the compiler makes the necessary connections. The compiler has to learn to do this, and as we become familiar with green it gets good at it. It gets so that it can make something green in a twinkling – and sometimes it is right so to do, sometimes wrong.

We also suppose that all brains decide on roughly the same way of coding for green, or anything else of an everyday variety. Maybe this results from, for example, coding constraints arising from the nature of colour and the way that information about colour is delivered to the brain. Maybe this is something with which we are born, a product of genes rather than learning.

Conclusions

There are clearly other ways to include data on our layers; we are not stuck with the soft centred patterns. But a lot more work is needed before one can make a reasoned choice between them – rather than a guess.

References

Reference 1: http://psmv3.blogspot.co.uk/2017/03/soft-centred-patterns.html.

Reference 2: http://psmv3.blogspot.co.uk/2017/06/on-scenes.html.

Reference 3: http://psmv3.blogspot.co.uk/2017/04/a-ship-of-line.html.

Reference 4: http://psmv3.blogspot.co.uk/2017/06/on-scenes.html.

Reference 5: http://psmv3.blogspot.co.uk/2017/04/its-chips-life.html.

